Feature: The New Intelligence

Alex Patterson

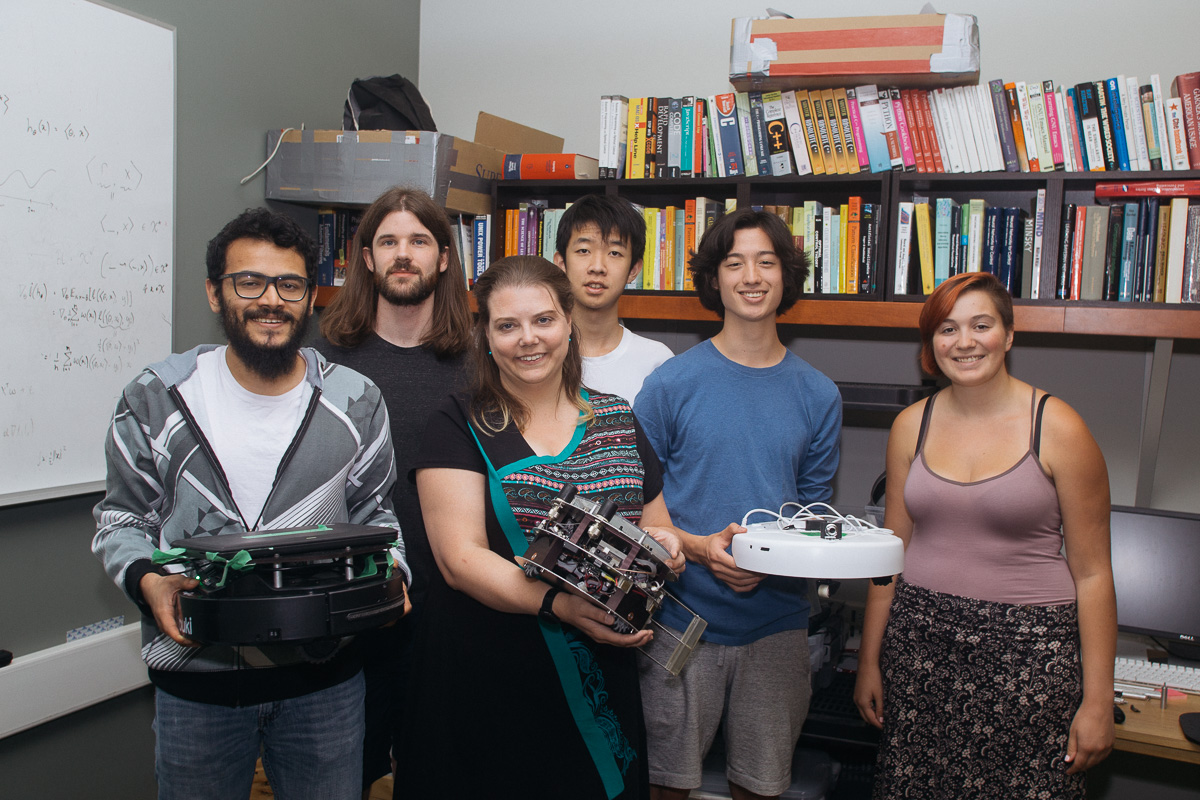

Anna Koop stands inside a makeshift wooden pen while a Roomba races towards her, turning at the last minute and zooming away.

With a laptop running an artificial intelligence program mounted to its back with green masking tape, and a camera on its front, the Roomba went from slamming into the walls of the pen to turning jerkily to avoid them.

Koop is a PhD student working at the Alberta Machine Intelligence Institute, an umbrella organization for U of A researchers studying AI with a $2 million annual budget for research and commercialization. Her PhD supervisor is Michael Bowling, a computing science professor and head of the university’s Computer Poker Research Group, which has built some of the best poker-playing AI systems on the planet.

Koop explains that the Roomba doesn’t really have a purpose; it’s a robot that researchers in the Reinforcement Learning and Artificial Intelligence lab, a subset of the institute, can use to explore learning. Whether they want the Roomba to avoid walls, follow a person around a pen, or crash into the boards is up to them.

The research Roomba, referred to as the Robot Learning Project, is an example of reinforcement learning: a subfield of AI where computers aren’t told the right thing to do, but are given positive reinforcement when they do something right. Koop compares it to giving a dog a cookie. By first slamming into the wall, the robot learns through experience to predict when it’s approaching the wall so it can turn away.
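For readers who want to see the idea in code, here is a minimal sketch of reinforcement learning on a toy version of the pen. It is not the lab's software: the real Roomba learns from camera images, while this example assumes the robot already knows how many steps it is from the wall. The reward values (a small "cookie" for each safe step forward, a penalty for a crash), the pen size, and every name below are assumptions made for illustration. The learning rule used is tabular Q-learning, a standard textbook method.

```python
import random

ACTIONS = ("forward", "turn")
START = 4  # hypothetical pen size: steps between robot and wall after a turn


def step(distance, action):
    """Return (next_distance, reward) for one move in the toy pen."""
    if action == "turn":
        return START, 0.0          # turning away is safe but earns nothing
    if distance == 1:
        return START, -10.0        # crashed; the robot is put back at the start
    return distance - 1, 1.0       # the "cookie" for a safe step forward


def train(steps=20000, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn action values by trial and error with tabular Q-learning."""
    rng = random.Random(seed)
    q = {(d, a): 0.0 for d in range(1, START + 1) for a in ACTIONS}
    distance = START
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)   # occasionally try something random
        else:
            action = max(ACTIONS, key=lambda a: q[(distance, a)])
        nxt, reward = step(distance, action)
        best_next = max(q[(nxt, a)] for a in ACTIONS)
        # Nudge the estimate toward the reward plus discounted future value.
        q[(distance, action)] += alpha * (
            reward + gamma * best_next - q[(distance, action)]
        )
        distance = nxt
    return q


def greedy_policy(q):
    """Pick the highest-valued action at each distance from the wall."""
    return {
        d: max(ACTIONS, key=lambda a: q[(d, a)]) for d in range(1, START + 1)
    }


if __name__ == "__main__":
    print(greedy_policy(train()))
```

Nobody tells the toy robot to turn at the wall. It crashes a few times, the penalty lowers its estimate for driving forward at close range, and turning away becomes the better-valued choice.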

The hope is that the robot won’t have to fake being intelligent, unlike the chat bot on a phone.

“This lab has always been about looking into what’s called strong AI — looking into systems that can actually understand the world,” Koop says. “That’s why our big demo is a small robot avoiding a wall.”

Parash Rahman, a fifth-year computing science student, works on the Robot Learning Project as an intern. With other interns and graduate students, he’s been setting up the project to be accessible to researchers who don’t know as much about robotics. He has helped create the wooden pen environment and the tutorial experiment of having the Roomba learn to avoid walls based on what it sees.

Rahman works with computers but, like other people who are drawn to the lab, he’s interested in ideas of learning, thinking, and human nature. He explains that they could have added sensors to the Roomba so it could tell when it’s close to a wall and avoid it, but instead they want to see the computer build knowledge on its own from seemingly random inputs.

“I think the reason we seem so warm is because we understand how other humans sense the world,” Rahman says.

“If you make a robot that has a robot friend, maybe they can understand each other and maybe that understanding can be classified as human. I think all these things are possible because we exist.”

While the research being done at the RLAI lab is closer to the realm of “pure” science, and the scientists aren’t really trying to apply it to the real world, the technology running the Roomba around the pen can be found in applied settings like medicine. The tech is used to move the arms, wrists, and fingers of prosthetics for amputees, who face the difficulty of controlling their new bionic limb in the way they would have controlled a real arm.

Alexandra Kearney worked on limbs that mold to a person in real time over a lifetime, learning, supporting, and sometimes executing actions the user is likely to take. Now a PhD student, Kearney worked on AI-powered prosthetics as an undergraduate intern in the Bionic Limbs for Natural Control lab in the Faculty of Medicine, assessing how researchers could use AI and reinforcement learning to adapt to the user.

Kearney also worked on reading electrical signals from the surface of a person’s skin using electrodes to translate them into controls for a prosthetic. That way, muscles flexing become similar to a joystick controlling a video game. Typically, these bionic limbs can only be controlled one joint at a time. The user has to move the elbow to a certain position, then the wrist, and finally a finger. The lab’s goal is to unite those individual movements by creating an artificially intelligent arm that can switch between joints to perform actions that it thinks the user wants to do.

Dylan Brenneis, a mechanical engineering Master’s student who works with Kearney in the BLINC lab, is creating bionic limbs for testing AI algorithms. As an undergraduate student, he worked a co-op term at the lab, and tried to figure out what kind of sensors were needed to get the machine and human on the same page. The hand he built has four position sensors and a camera in the palm. Now for his Master’s project, he’s creating a wrist.

Amputees sometimes come into the BLINC lab to try out the technology and participate in studies.

“When you lose a limb, it’s a very life-changing thing,” Brenneis says. “To be building the technology that’s going to be replacing that is also very personal.”

All the files for the robot arm and hand have now been shared online so that any research group can 3D print one. The BLINC lab’s technology is used by companies to improve bionic limbs for patients.

The U of A is ranked second in the world for artificial intelligence research after Carnegie Mellon University, according to csrankings.org. Jonathan Schaeffer, U of A’s Dean of Science and an artificial intelligence researcher, says the program’s world domination wasn’t planned.

“It would be nice to say that we had this plan to become a world powerhouse in artificial intelligence research,” he says. “But there really wasn’t any plan, it was just happenstance that good people arrived here.”

In the late 1970s, the U of A had a couple of artificial intelligence researchers. In the 1980s, Schaeffer and a few more professors came on board. He attributes the success of the program to good people acting as magnets to attract even more good people.

Back in 2002, four computing science professors applied for money from Alberta Ingenuity, a large provincial endowment aiming to diversify the economy. With the provincial funding, they created the Alberta Machine Intelligence Institute (previously called the Alberta Innovates Centre for Machine Learning). The funding drew Michael Bowling, who did his PhD at Carnegie Mellon University, from the United States to Edmonton.

Bowling says that for a long time, he and other machine learning professors were frustrated that the students graduating out of the program had to leave to find jobs in AI. They went to the Bay Area to work for social media companies, Seattle to work for Amazon, or London to work for DeepMind.

“The obvious thing was for one of the large tech companies to realize the opportunity,” Bowling says. “That there was a large talent pool here that sat almost entirely untapped.”

Besides creating some of the world’s best poker-playing AI programs, Bowling and his team have been using Atari video games to test AI systems for over five years with the RLAI lab. Testing requires the AI system, which knows nothing about the game it’s about to play, to fiddle with the joystick, press the buttons, and figure out what it can and can’t control in its 1980s video game environment.

Bowling is interested in AI systems that can learn and act intelligently in any environment. To test such a system, many different problems are needed. The idea to use Atari as a testing platform has now been picked up by more than 50 research groups, including DeepMind, who have shown for the first time that AI systems can perform better than amateur humans on over half of the Atari games.

In the game Montezuma’s Revenge, players have to retrieve a key by climbing ladders, running across moving platforms, and jumping over a boulder. The set of tasks to complete is hyper-specific, and any other behaviour ends in death. Games like this highlight the limits of current AI capabilities. AI systems don’t do well on games where getting points means putting together a long series of actions.

“It’s a very hard problem to figure out how you can explore that space to discover that there is a sequence of behaviour that can get you some points,” Bowling says.
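A back-of-the-envelope calculation hints at why. The sketch below assumes, purely for illustration, that exactly one sequence of joystick inputs earns points and that the system picks each input at random. The function name and the sequence length of 10 are hypothetical; the figure of 18 is the size of the full Atari joystick action set (directions, the fire button, and their combinations).

```python
def chance_of_random_success(num_actions: int, sequence_length: int) -> float:
    """Probability that uniformly random inputs reproduce one exact sequence."""
    return (1.0 / num_actions) ** sequence_length


if __name__ == "__main__":
    # 18 possible joystick inputs, and a hypothetical 10-step scoring sequence.
    print(chance_of_random_success(18, 10))
```

Under those assumptions, a randomly exploring system would stumble on the scoring sequence less than once in a trillion attempts, and each extra step in the sequence makes the odds 18 times worse.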

It’s even more challenging for AI agents to learn games where the player gets negative points if they do anything but the sequence that will give them a high reward.

“The AI systems tend to get afraid of all the ways they could die so they don’t try to reach out and get the skills they need to get a higher score,” Bowling says.

These are problems Bowling’s team are trying to tackle.

“One of the things humans do so well is they can bring in all their experience from the past, whether it’s playing other video games or just from life, they can bring into various problems and I think that’s why they’re able to learn so quickly,” he says.

Another one of the PhD students Bowling supervises is Marlos Machado, who grew up in Brazil and met Bowling at a conference.

He immediately knew he wanted Bowling to be his supervisor because he found his talk on the Atari system fascinating.

Before Machado started his PhD, Bowling invited him to a board game night with the AI team. It highlighted to him how friendly and approachable the world-renowned professors in the department are.

“You look around and Rich Sutton, the celebrity who created reinforcement learning, is playing a game,” he says. “I realized that I found myself playing games with the guy whose textbook I used to read when I was in Brazil.”

This summer it was announced that DeepMind, an artificial intelligence research group that was bought by Google in 2014 for almost $650 million, would open its first office outside the United Kingdom here in Edmonton. Working with the U of A, the new office will be headed by Bowling, as well as Richard Sutton and Patrick Pilarski, who are all U of A computing science professors.

Schaeffer says he wasn’t surprised to hear DeepMind was coming to Edmonton. Their work uses a lot of reinforcement learning, which is the U of A’s area of expertise. Sutton is world-renowned as the father of reinforcement learning and has written the definitive textbook on the subject.

Other tech companies are following DeepMind’s lead, Schaeffer says, with the Royal Bank of Canada’s first research office outside of Toronto also coming to campus.

Bowling says that many of his students don’t want to leave Edmonton when they graduate because they enjoy the city and the research they’re doing. He’s excited to have an opportunity to have these strong AI researchers stay in Edmonton, and maybe bring back some people who have left the city.

Machado isn’t sure if he’ll end up staying in Edmonton and working at DeepMind with Bowling, but he says he’s happy to have it as an option.

“I remember talking to my wife two or three years ago about this and telling her that I would have to leave Edmonton because there were no jobs for me here,” he says. “And that’s changed. Now if you want to stay in Edmonton, there’s an amazing company with so many amazing people that you can work with.”